We’ve predicted doom before, yet technology saved us: how the pace of human innovation often surprises us, plus thoughts on AI, energy use, fusion and more.

Using AI's projected energy use as an example, it is time to discuss the intersection of technological innovation and our attempts to predict it. Humans are pretty good at the former and bad at the latter.

As ever, there is a lot of news on the technology front. In this post, though, I want to focus on AI and its energy needs (plus touch on fusion), as it is a good example of how hard it is to predict how technology will play out over time. (For the record, this is not an article on whether AI works or not, or whether it is a good thing or not - only on how we can use it to view general technology change over time.) Coverage of AI and energy tends towards the doomerism angle and I understand why: here is a new technology being touted as hugely disruptive, and often not in a good way. Opinions vary widely. However, here I want to look at how bad we are as predictors of what is to come, because we don’t know what we don’t yet know.

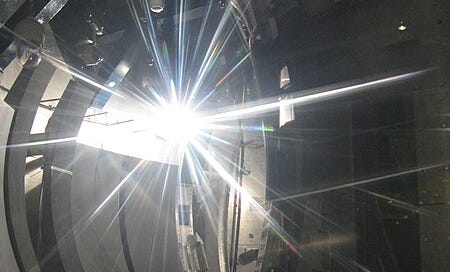

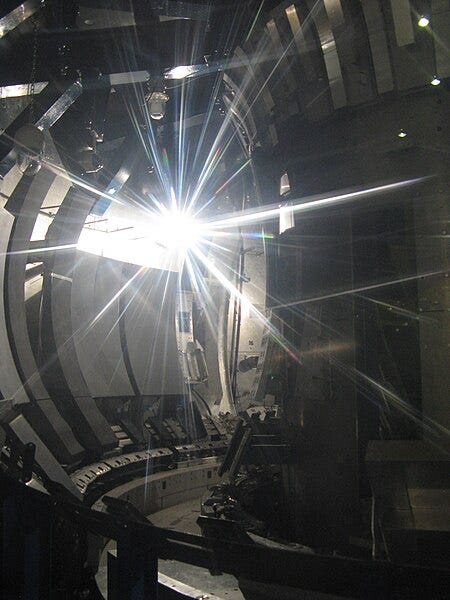

(Image - Lens flare on the plasma vessel of the Joint European Torus (JET) Tokamak in Culham. Fusion is a technology now interacting with that of AI. Source.)

Going back into history to frame this topic, we find plenty of great examples. One relevant here is that of Thomas Malthus. In 1798 he looked at the growing population, looked at the amount of food being produced and concluded that doom lay ahead and that, bluntly, we should let people starve, because feeding poor people led to them having babies and so even more poor people to feed. He was reading the data, as it stood, fairly accurately. He saw the rising population, knew the amount of food being produced, projected both forwards as-is and predicted a point of crisis.

(Image: Graph showing the principle of Malthus’ theory of population. Graph by Jan Oosthoek, source)

However, what he wasn’t able to project was that the future would be different from his present. What he missed was that population growth would level out and that - key for our discussion here - the rate of innovation in food production would accelerate and allow far more production than he thought possible. There was no doom as he predicted it.
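To make the shape of that argument concrete, here is a minimal Python sketch of an "as-is" projection: population compounding each year while food supply grows by a fixed amount. Every number and name in it is made up for illustration and is not historical data; it only shows how a straight-line assumption about food produces a crisis point, and how much that point moves once food production grows faster than assumed.

```python
def malthus_crisis_year(pop0, pop_growth, food0, food_step, max_years=500):
    """Return the first year in which population exceeds the people food can feed.

    Population grows geometrically (multiplied by 1 + pop_growth each year).
    Food supply, measured in "people it can feed", grows arithmetically
    (food_step is added each year). Returns None if the lines never cross
    within max_years.
    """
    population, food = float(pop0), float(food0)
    for year in range(max_years + 1):
        if population > food:
            return year
        population *= 1 + pop_growth
        food += food_step
    return None


# Hypothetical inputs, chosen only to illustrate the idea.
as_projected = malthus_crisis_year(pop0=100, pop_growth=0.03, food0=120, food_step=2)
with_innovation = malthus_crisis_year(pop0=100, pop_growth=0.03, food0=120, food_step=6)

print("Crisis year, projecting food growth as-is:", as_projected)
print("Crisis year, if food growth triples:", with_innovation)
```

The sketch still assumes food grows in a straight line. The point is only that the predicted crisis year is very sensitive to an assumption nobody at the time could check.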

But we didn’t really learn from Malthus’ misunderstandings. The same argument was made in the late 1960s, the best-known version being the book ‘The Population Bomb’, which argued (with data) that the human population was growing too fast, that we could not feed all these new people and that “...hundreds of millions of people will starve to death.” Except that didn’t happen. There was no doom as they predicted it. Yes, there were famines and yes, there was hunger, but the technological innovations of the Green Revolution, the 1960s-1980s series of farming and agricultural initiatives that saw a huge increase in food production, meant that overall more humans had more calories than before. (Again, a note: this post is not an analysis of these events, the good and the bad, but is here to show the same point: the vast underestimation of the pace and impact of technological change.)

Which brings us back to AI and energy, where the assumption was that growing energy demands from AI and growing use of AI would lead to doom. Here’s a New York Times headline example from July 2024: “The soaring electricity demands of data”. Yet back in April 2024 Heatmap News was a rare voice urging caution about those assumptions:

In a 1999 Forbes article, a pair of conservative lawyers, Peter Huber and Mark Mills, warned that personal computers and the internet were about to overwhelm the fragile U.S. grid. Information technology already devoured 8% to 13% of total U.S. power demand, Huber and Mills claimed, and that share would only rise over time. “It’s now reasonable to project,” they wrote, “that half of the electric grid will be powering the digital-Internet economy within the next decade.” They could not have been more wrong. In fact, Huber and Mills had drastically mismeasured the amount of electricity used by PCs and the internet. Computing ate up perhaps 3% of total U.S. electricity in 1999, not the roughly 10% they had claimed. And instead of staring down a period of explosive growth, the U.S. electric grid was in reality facing a long stagnation. (Bold emphasis mine.)

After story after story about the steady increase in the cost and power demands of AI, in January this year a small Chinese start-up, DeepSeek, smashed all those assumptions by producing a model that performs comparably to others yet needed only a fraction of the computing power of past models to train. Shares in energy companies fell on the news as the markets reacted to the upheaval. We need to be skeptical of projections:

But there’s an important caveat that is almost never mentioned, let alone emphasized, in all the discourse about AI and the explosion in electricity demand: These are all just forecasts, predictions, informed guesses about the future…. But the confidence most people make their predictions with is what I’m most skeptical about—mainly because humans are so bad at predicting the future.

After all, as well as being wrong about food production, our projections were also wrong about other technologies including lithium-ion batteries, LED lights and solar power. On solar power projections, by 2019 actual power generated by solar outstripped what had been projected back in 2010 by the IEA. We achieved a rate of production that is 16 years ahead of projections. 2024’s actual solar output was 3x what was projected back then.

Let's also look at LED lights:

“In 2010, LED lights were installed in less than 1% of sockets. They cost $40 per bulb. Then the cost fell to as little as $2 per bulb and they became one of the most quickly adopted new technologies in history.”

(Image - Blue LED strip light by Maksym Kozlenko, source.)

Now this does not mean that there are no longer any worries about the energy cost of AI; that is still a worry because of the aforementioned uncertainty. This article does a good job of explaining the various routes that AI, climate and energy may take. The TL;DR is that it’s complex and we just don’t know.

You’ll hear people mention the ‘Jevons Paradox’, for example. This is where a breakthrough reduces the resources needed to do something, which you’d expect to mean fewer resources used overall. Yet it can have the opposite effect: because of that reduced cost, the thing gets used in more places and by more people. The net effect can be more resources used overall.

But the Jevons Paradox didn’t hold for LEDs, which decreased lighting power consumption even as more people used them. The Jevons Paradox may, or may not, apply to AI. We just don’t know.
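The arithmetic behind the Jevons Paradox is simple enough to sketch in a few lines of Python. This is only an illustration under made-up numbers (the function names, the 5x efficiency gain and the demand multipliers are all hypothetical, not measurements of LEDs or AI): total use is energy per use times number of uses, so the outcome depends on whether demand grows by more or less than the efficiency gain.

```python
def total_energy(energy_per_use, uses):
    """Total energy consumed: energy per use times number of uses."""
    return energy_per_use * uses


def after_efficiency_gain(energy_per_use, uses, efficiency_gain, demand_multiplier):
    """Total energy once each use is `efficiency_gain` times cheaper
    and the number of uses has grown by `demand_multiplier`."""
    return total_energy(energy_per_use / efficiency_gain, uses * demand_multiplier)


# Hypothetical starting point: 1,000 uses at 10 units of energy each.
before = total_energy(10.0, 1000)

# Demand doubles, but each use is 5x cheaper: total energy falls.
led_like = after_efficiency_gain(10.0, 1000, efficiency_gain=5, demand_multiplier=2)

# Demand grows 8x with the same 5x gain: total energy rises (the Jevons case).
jevons_like = after_efficiency_gain(10.0, 1000, efficiency_gain=5, demand_multiplier=8)

print("Before:", before)
print("Demand grows less than efficiency:", led_like)
print("Demand grows more than efficiency:", jevons_like)
```

Which of the two cases AI ends up resembling depends on a demand multiplier that nobody knows in advance.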

Then, while I was researching this article, and as if to underscore this uncertainty, someone claimed to have recreated the core functions of DeepSeek for just $30. Not $30 million, $30.

Those who are bullish on what AI might be able to do cite the argument that its power can help solve some of the technological challenges we need to overcome to make the next generation of power technology, like fusion. They may be wrong, but they may also be very right. I’ve covered fusion before here, but what is key to note is that there has been a huge range of technological and scientific innovations over the last decade or two that have gradually chipped away at the wall of difficulty we need to overcome to make this technology usable. Things are changing fast, and here is an example of AI helping to chip away at that wall:

Nuclear fusion reactors, often referred to as artificial sun, rely on maintaining a high-temperature plasma environment similar to that of the sun. … Accurately predicting the collisions between these particles is crucial for sustaining a stable fusion reaction. … "By utilizing deep learning on GPUs, we have reduced computation time [of these projections] by a factor of 1,000 compared to traditional CPU-based codes," the joint research team stated.

The key takeaway here is that we get the prediction of technology wrong time and time again. One of the constants of humanity is innovation, and it has been increasing in pace and breadth over time. These innovations are, yes, technological, but also intellectual and cultural. So we’re creating new things, new ideas and new ways in which they mesh and get used. That is not to say that all innovation is good - it is clearly a mixed bag. But it is happening, and while we’re not helpless in the face of change, we’re not good at predicting it or its second-order effects and beyond. Indeed, as well as innovating technologies, we innovate ideas that help us to shape them. Democracy, for example, gives people (in theory anyway!) the ability to shape the rules for how technology is developed and deployed.

Three technologies connected to climate change that are debated (rightly) are AI, fusion and carbon capture. We’ve already been wrong on renewables - we will be wrong on other technologies also - the question is in what way we will be wrong: in a good way or in a bad way?

I think there is more of the good way to come.

PS. Don’t forget the new ‘Points of Action’ section of this project with ideas for things you can do to help the fight against climate chaos.

I also liked this and think/hope that you are right, though I fear it will be a near-run thing in terms of holding climate change to civilisation-survivable levels.

Much as I support voluntary simplicity and things like permaculture (I did a PDC with David Holmgren himself and respectfully partially disagree with some of his predictions in the nonetheless useful Retrosuburbia), I think it's simply not possible for our current systems and populations to fold back into an energy decline. I fear we either keep things afloat (while still striving mightily for simplicity, frugality and improved efficiency) or the whole great machine will blow up in our faces.

My only enduring fear, though, is that we may be on a kind of treadmill that we can never get off...

Great article, thank you!